Overview

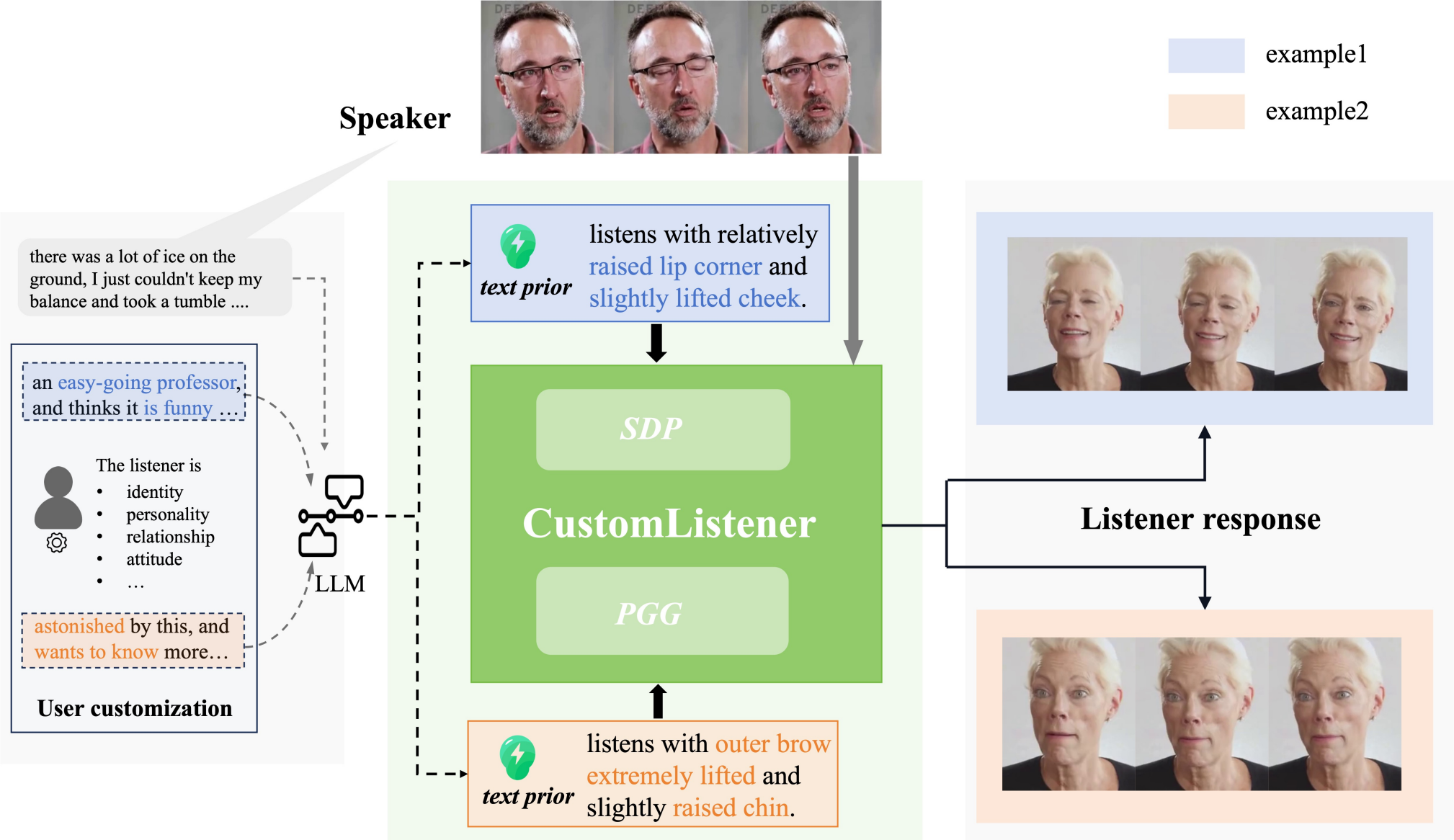

Given user-customized texts, a speaker video, and a listener portrait image, our system can generate a listener video with natural facial motions (pose, expression).

Listening head generation aims to synthesize a non-verbal responsive listener head by modeling the correlation between the speaker and the listener in dynamic conversion. The applications of listener agent generation in virtual interaction have promoted many works achieving the diverse and fine-grained motion generation. However, they can only manipulate motions through simple emotional labels, but cannot freely control the listener's motions. Since listener agents should have human-like attributes (e.g. identity, personality) which can be freely customized by users, this limits their realism. In this paper, we propose a user-friendly framework called CustomListener to realize the free-form text prior guided listener generation. To achieve speaker-listener coordination, we design a Static to Dynamic Portrait module (SDP), which interacts with speaker information to transform static text into dynamic portrait token with completion rhythm and amplitude information. To achieve coherence between segments, we design a Past Guided Generation Module (PGG) to maintain the consistency of customized listener attributes through the motion prior, and utilize a diffusion-based structure conditioned on the portrait token and the motion prior to realize the controllable generation. To train and evaluate our model, we have constructed two text-annotated listening head datasets based on ViCo and RealTalk, which provide text-video paired labels. Extensive experiments have verified the effectiveness of our model.

As shown in Video4, without SDP module, the model can only generate constant motions (always smile in this scenario). After incorporating SDP module, the generated listener motions can not only fluctuate with the speaker's semantics and movement amplitude (i.e. enhanced speaker-listener coordination), but also achieve progressive motion changes.

As shown in Video5, our proposed PGM module has two benefits: Firstly, with PGM module, the generated listener motions will be more coherent between adjacent segments. Furthermore, the PGM module can also help maintain past behavioral habits (e.g. frowning in the last sample of Video5).